
$25 Million Lost to a Deepfake Scam — And Why Your Security Protocols Won’t Stop the Next One

Every employee followed protocol. Every control was in place. And yet AI fraud succeeded. Deepfake attacks are accelerating and your organization could be next.

In January 2024, an employee at Arup authorized $25 million in wire transfers after speaking with the company’s CFO on a video call.

- The CFO’s face looked right.

- His voice sounded right.

- Colleagues joined the call.

Everything seemed normal. It wasn’t.

Every participant on that call was an AI-generated deepfake, created using publicly available video and audio pulled from internal meetings and online conferences. The attackers didn’t hack Arup’s systems. They didn’t bypass controls.

They followed protocol.

This wasn’t a failure of awareness or judgment.

It was the collapse of a security model built on the assumption that seeing and hearing someone proves their identity.

And Arup won’t be the last organization to experience this.

Why Following the Rules Led to $25 Million Lost

The Arup employee did exactly what existing security procedures required:

- Verified identity visually

- Confirmed the voice matched

- Observed colleagues participating in the call

- Proceeded with the transfer

Perfect compliance with an obsolete security protocol.

By every traditional standard, the employee acted reasonably. They used the verification tools available and made a judgment based on sensory evidence.

And still lost $25 million.

Why?

Arup had no compensating controls in place:

- No out-of-band verification

- No dual authorization requirement

- No transaction limits triggering escalation

- No mandatory delay for high-risk transfers

There was no system designed to catch failure, only a human expected to decide correctly based on what they saw and heard.
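As a rough illustration of what such a system could look like, the four missing controls can be expressed as a single release gate that a payment must pass before it leaves the account. This is a minimal sketch under stated assumptions: the names (`TransferRequest`, `blocking_reasons`), the escalation limit, and the 24-hour delay are all hypothetical, and nothing here describes Arup's actual systems.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical thresholds; real values would come from the organization's risk policy.
ESCALATION_LIMIT = 100_000              # amounts at or above this count as high-risk
MANDATORY_DELAY = timedelta(hours=24)   # cooling-off period for high-risk transfers


@dataclass
class TransferRequest:
    amount: float
    requested_at: datetime
    requested_by: str
    approvers: set[str] = field(default_factory=set)
    out_of_band_confirmed: bool = False  # e.g. call-back to a number already on file


def blocking_reasons(req: TransferRequest, now: datetime) -> list[str]:
    """Return the controls that still block release; an empty list means release."""
    reasons = []
    if not req.out_of_band_confirmed:
        reasons.append("out-of-band verification missing")
    if req.amount >= ESCALATION_LIMIT:
        # Dual authorization (as assumed here): two approvers, neither the requester.
        independent = req.approvers - {req.requested_by}
        if len(independent) < 2:
            reasons.append("dual authorization missing")
        if now - req.requested_at < MANDATORY_DELAY:
            reasons.append("mandatory delay not elapsed")
    return reasons
```

The point of the design is that no input to the gate depends on how convincing a face or voice was. A flawless deepfake call leaves `out_of_band_confirmed`, `approvers`, and the clock exactly where they were.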

Arup’s global CIO, Rob Greig, later summarized:

“What we have seen is that the number and sophistication of these attacks has been rising sharply in recent months.”

The data confirms this with uncomfortable clarity.

Deepfake Fraud Is Happening Faster Than You Think

The Arup case feels extreme until you look at how common AI-powered fraud has become.

Other companies, across industries, are now facing attacks that are just as sophisticated. More examples are emerging every month.

UAE Bank — $35 Million Voice Cloning Fraud

A bank manager personally knew the director whose voice was cloned. He recognized the accent, speech patterns, and cadence. Supporting emails, legal documentation, and a letter of authorization were all present.

He approved $35 million.

Every control layer was technically in place, but once the manager believed he knew who he was dealing with, the controls became irrelevant. Voice cloning fraud doesn’t bypass controls; it convinces humans to bypass them.

German CEO — The Three-Call Impersonation Scam

Fraudsters impersonated the CEO of a German parent company in three calls to a UK subsidiary executive:

- Call one: Transfer request, reassurance promised

- Call two: Follow-up confirmation

- Call three: Second payment request

The scam collapsed only when reality contradicted the deception. The promised reimbursement never arrived, yet another request came through. Shortly after, a further scam call came in while the executive was already speaking with the real CEO.

As soon as he questioned the caller’s identity, the line went dead.

China — Video Deepfake Financial Fraud ($262,000)

A finance employee saw her manager’s face on a video call and heard his voice requesting an urgent transfer. She followed the company’s standard video verification protocol.

Perfect compliance.

$262,000 lost.

KnowBe4 — A Deepfake Hiring Attack Detected in 25 Minutes

A cybersecurity firm unknowingly hired a North Korean threat actor who passed four video interviews using AI-enhanced images and stolen identities.

What saved the company wasn’t detecting the deepfake; it was layered security controls:

- Restricted system access

- Continuous SOC monitoring

- Heightened new-hire activity analysis

The attacker was identified within 25 minutes because multiple layers compensated for identity verification failures.

The Numbers Behind the Deepfake Crisis

These incidents are only the ones that became public.

The real deepfake fraud threat landscape is far larger:

- Deepfake attacks occurred once every five minutes in 2024

- Identity fraud using deepfakes surged 3,000% in 2023

- Deepfake fraud cases rose 1,740% in North America from 2022–2023

- Financial losses exceeded $200 million in Q1 2025 alone

- Businesses lost an average of nearly $500,000 per deepfake incident in 2024

- Large enterprises reported losses as high as $680,000 per incident

- CEO impersonation scams now target more than 400 companies every day

The acceleration is driven by accessibility:

- Convincing voice clones require 20–30 seconds of audio

- Real-time video deepfakes can be produced in under an hour

- Some AI models achieve 85% voice accuracy with just three seconds of audio

This is no longer niche cybercrime. It’s industrial-scale AI fraud.

Why Humans Can’t Stop Deepfakes

Human detection rates for high-quality video deepfakes sit at 24.5%.

If you’re on a video call approving a sensitive request, you have roughly a one-in-four chance of spotting deception.

AI detection tools perform better in lab environments, but real-world accuracy can drop by up to 50% against new deepfake techniques.

Meanwhile:

- ~25% of company leaders report little familiarity with deepfake technology

- 32% lack confidence their employees could recognize deepfake fraud

- 60% of organizations don’t feel prepared for AI-driven cybercrime

- Only 22% of financial institutions use AI-based fraud prevention tools

- 80% of companies lack protocols for deepfake attacks

Employees are being asked to identify threats they are statistically unlikely to recognize.

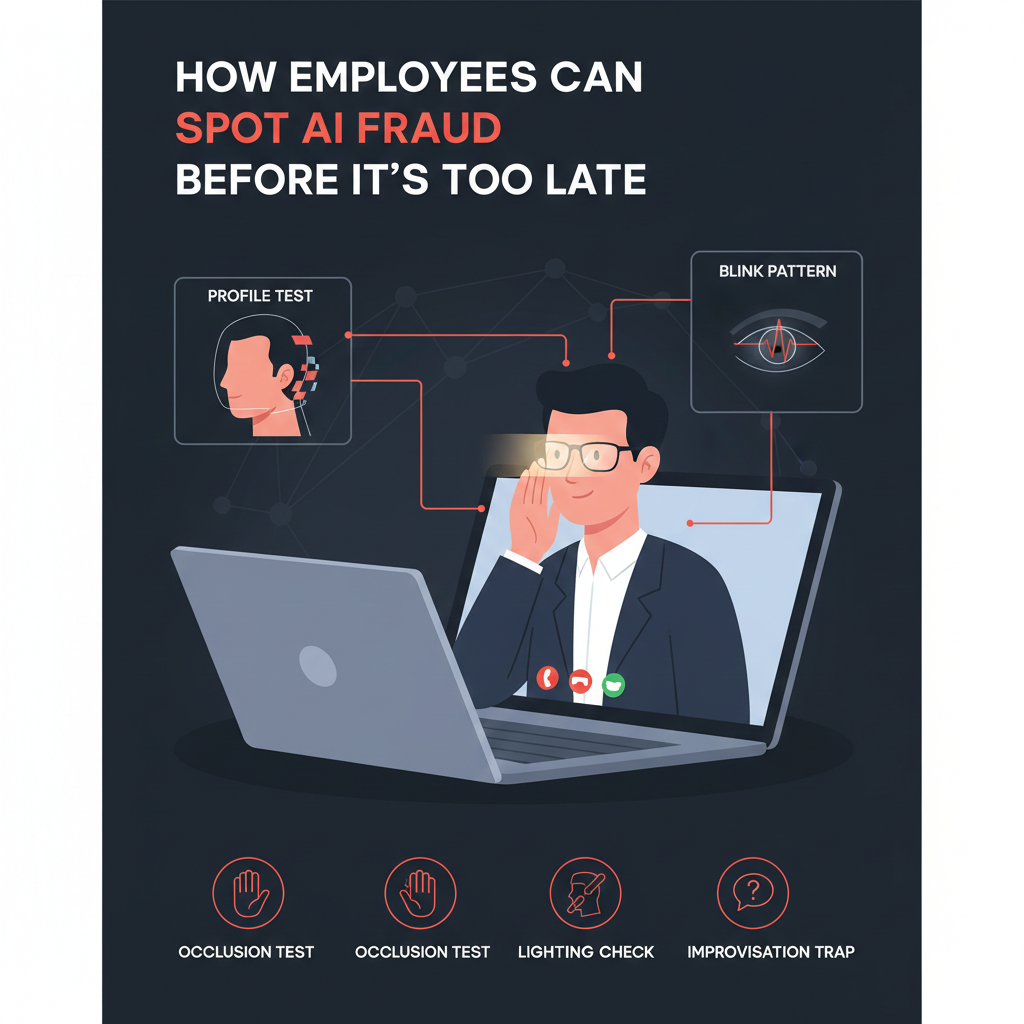

How Employees Can Spot AI Fraud Before It’s Too Late

When a video call involves financial or access-related requests, employees must move from passive observation to active disruption:

- The Profile Test: Ask the caller to turn their head 90°. Deepfake overlays struggle with 3D depth.

- The Occlusion Test: Ask the caller to move a hand or object in front of their face. Digital masks warp or flicker.

- Lighting Consistency: Watch eye reflections or glasses. Deepfakes rarely match real light sources.

- Blink Patterns: AI blinks mechanically or not at all.

- The Improvisation Trap: Ask unexpected personal questions. Latency or visual stutter can reveal synthesis.

These techniques buy time, but do not replace strong controls.

The Security Controls That Could Stop Every Deepfake Attack

Across the Arup, UAE bank, German CEO, China, and KnowBe4 cases, the following controls would have prevented or limited the losses:

- Out-of-Band Verification (Easiest): Verify requests through a separate communication channel.

- Dual Authorization (Independent): No single individual can approve high-risk transactions.

- Transaction Limits + Mandatory Delays: Urgency is the fraudster’s weapon. Limits and delays create verification space.

- No Human Override Policies: Protocol executes regardless of confidence or social pressure.

- Transaction-Level MFA: Not login MFA, but transaction-specific verification that can’t be bypassed.

- Cryptographic Identity Verification (Hardest): Hardware-attested video feeds, digital signatures, and cryptographic identity make deepfakes irrelevant.
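To make the difference between login MFA and transaction-level verification concrete, here is a minimal sketch of an approval code that is cryptographically bound to one specific payment. It uses only Python's standard `hmac` and `hashlib` modules. The function names, the message format, and the idea of a shared secret held on the approver's device are assumptions for illustration; a production system would more likely use asymmetric signatures from a hardware token.

```python
import hashlib
import hmac


def approval_code(secret: bytes, payee_account: str, amount_cents: int, nonce: str) -> str:
    """Derive a code bound to one specific transaction.

    The "|"-joined message format is a simplification for this sketch; a real
    format would need unambiguous encoding of each field.
    """
    message = f"{payee_account}|{amount_cents}|{nonce}".encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_approval(secret: bytes, payee_account: str, amount_cents: int,
                    nonce: str, presented_code: str) -> bool:
    """Recompute the code from the transaction being executed and compare."""
    expected = approval_code(secret, payee_account, amount_cents, nonce)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, presented_code)
```

Because the payee and amount are part of the signed message, a code issued for one transfer fails verification for any other. An attacker who talks an approver into "confirming" on a call still cannot redirect or inflate the payment without a fresh code from the approver's own device.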

The Lessons Every Organization Needs Now

The Arup incident isn’t a story about clever attackers.

It’s proof that visual and auditory verification no longer confirms identity.

Employees are operating under pre-AI assumptions. They trust their judgment. They believe protocols work. They think fraud is recognizable.

At a 24.5% detection rate, human judgment is not a control; it’s a liability.

The question is not whether your organization will face a deepfake attack.

The question is whether your controls will still function when it happens.

The framework exists.

Implementation difficulty ranges from simple to complex.

The alternative is operating in an environment where AI-powered fraud occurs every five minutes and humans lose three out of four times.

The Arup employee saw faces. Heard voices. Made reasonable decisions.

And lost $25 million.

Because the protocol was the vulnerability.

About This Article

Published: 31 January 2026

Last Updated: same as published

Reading Time: Approximately 15 minutes

Author Information

Timur Mehmet | Founder & Lead Editor

Timur is a veteran Information Security professional with a career spanning over three decades. Since the 1990s, he has led security initiatives across high-stakes sectors, including Finance, Telecommunications, Media, and Energy.

For more information including independent citations and credentials, visit our About page.


Editorial Standards

This article adheres to Hackerstorm.com's commitment to accuracy, independence, and transparency:

- Fact-Checking: All statistics and claims are verified against primary sources and authoritative reports

- Source Transparency: Original research sources and citations are provided in the References section below

- No Conflicts of Interest: This analysis is independent and not sponsored by any vendor or organization

- Corrections Policy: We correct errors promptly and transparently.


References and Sources

UK National Cyber Security Centre (NCSC)

- NCSC 2025 Annual Review and Prompt Injection Warning (December 2025)

- https://www.ncsc.gov.uk/

1. Adaptive Security - Arup Deepfake Scam Attack - URL: https://www.adaptivesecurity.com/blog/arup-deepfake-scam-attack

2. CNN - Arup Deepfake Scam Loss (May 16, 2024) - URL: https://www.cnn.com/2024/05/16/tech/arup-deepfake-scam-loss-hong-kong-intl-hnk

3. Deepstrike - Deepfake Statistics 2025 - URL: https://deepstrike.io/blog/deepfake-statistics-2025

This article synthesizes findings from cybersecurity reports, academic research, vendor security advisories, and documented breach incidents to provide a comprehensive overview of the AI security threat landscape as of January 2026.